Scaling a $200M Giving Initiative with AI: Automating Pledge Card Processing Using Amazon Textract

This Project at a Glance

I led the design and rollout of an AI-assisted pledge card processing workflow that helped North Point Ministries scale a $200M giving initiative by reducing manual data entry, preserving data accuracy, and enabling staff to process commitments faster during peak campaign moments.

Client: North Point Ministries (Digital Services Team)

Platforms: Amazon Textract, AWS Lambda, Amazon S3, Apple Shortcuts, Rock

Scope: Product strategy, workflow design, AI integration, human-in-the-loop UX, rollout

Audience: Campus staff and the digital and finance teams processing campaign pledges at scale

The Challenge

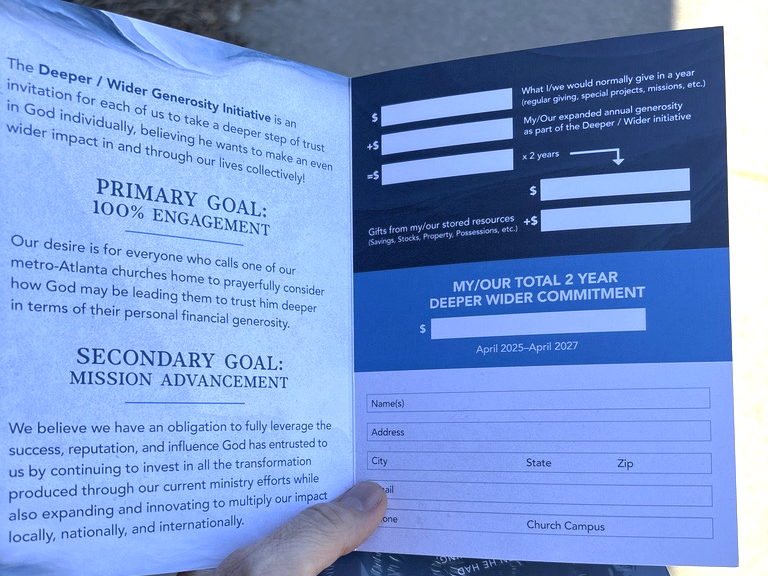

A $200M giving initiative meant thousands of handwritten pledge cards arriving in a short window, each varying widely in legibility, completeness, and format. Manual data entry was pushed to its breaking point, while accuracy and trust remained non-negotiable.

The Outcome

We introduced a lightweight, iPhone-based AI scanning workflow that allowed staff to process pledge cards roughly twice as fast as manual entry. The workflow preserved human judgment where confidence was low and scaled smoothly during peak campaign moments.

About This Project

The Context: An Incredibly Messy Problem at Campaign Scale

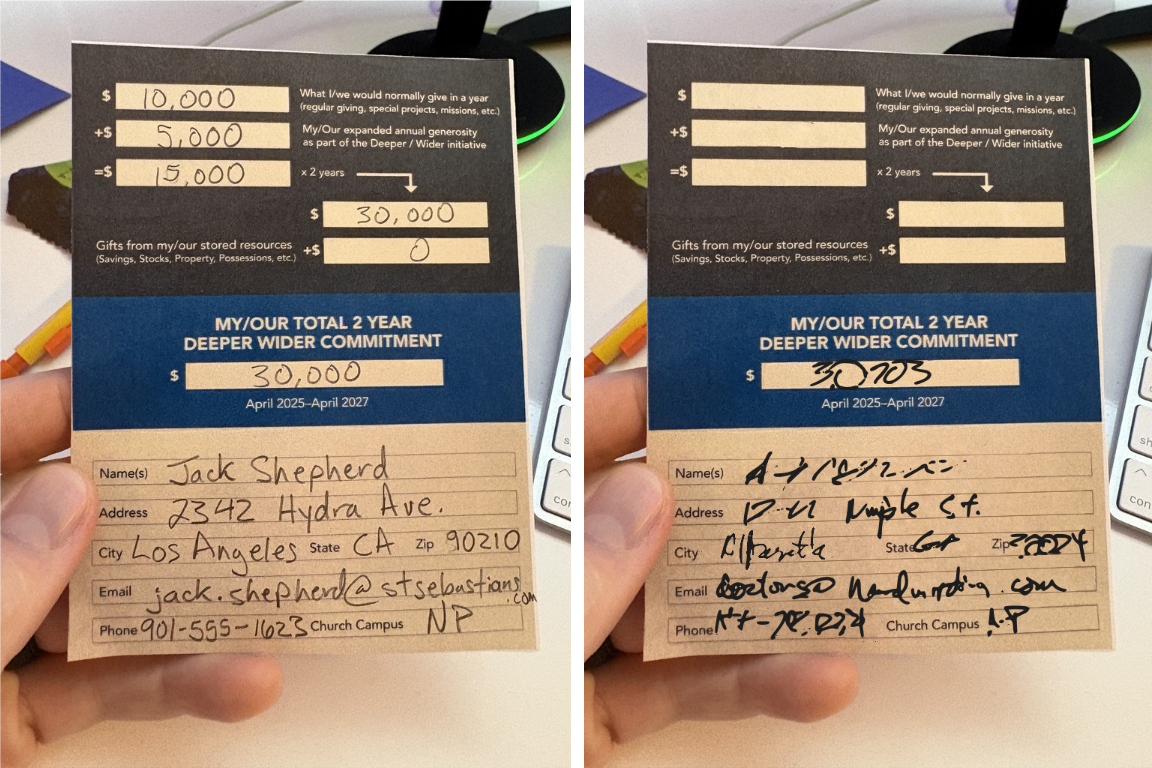

Handwritten pledge cards are one of the hardest inputs to normalize at scale. Every card is different. Handwriting varies wildly. Cards are folded, smudged, incomplete, or partially illegible. Some are pristine. Others are barely readable.

During the spring campaign, thousands of these cards arrived in a short window. Even with a capable team, brute-force manual entry created a perfect storm of slow throughput, high cognitive load, and avoidable errors. The challenge wasn’t just speed. It was accuracy, fatigue, and trust, all at the same time.

Before designing anything, I spoke with leaders at Crossroads Church, who had recently completed a major giving campaign of their own. They shared what surprised them, where things broke down, and what they wished they had done differently. We took those learnings, adapted them to our context, and intentionally looked for ways to improve on the model rather than simply copy it.

Reframing the Problem: Speed and Judgment

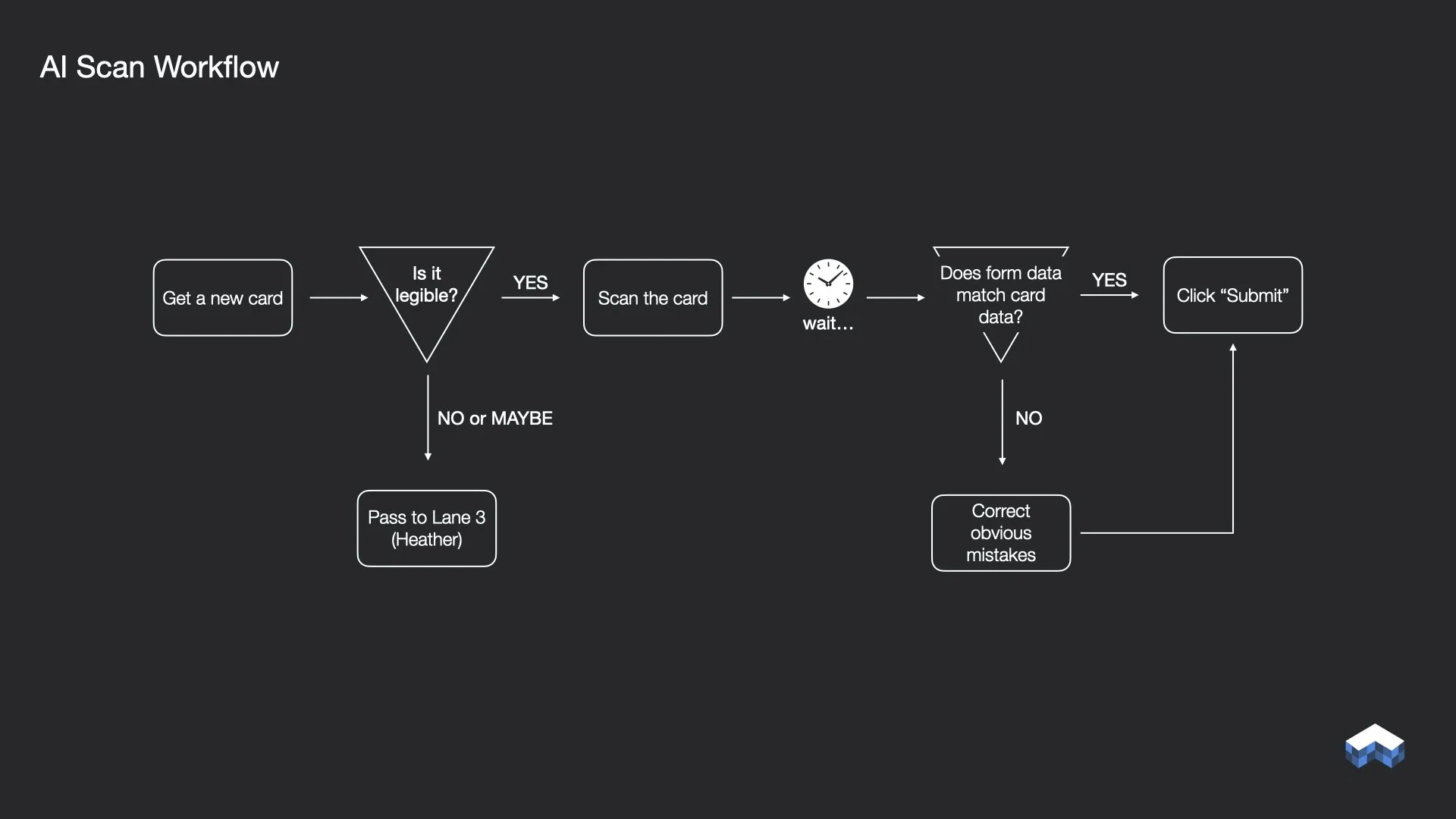

We knew AI-based scanning would be faster and less error-prone than manual entry, but we also knew it would never be perfect. The real design question wasn’t “Can AI read these cards?” It was “How do we design a workflow that knows when to trust automation and when to defer to a human?”

I designed the end-to-end process around that reality. Cards were intentionally triaged into three paths:

Cards clear enough for AI-assisted processing

Cards that clearly required a human to step in

Cards so incomplete or illegible that follow-up with the giver was the only responsible option

That workflow design mattered just as much as the technology.
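The three-path triage can be expressed as a small routing rule. This is a minimal sketch, not the production logic: the `route_card` function, the required field names, and the 90-point cutoff on Textract's 0 to 100 confidence scale are all hypothetical. In this simplification, a card goes to follow-up only when a required field is absent altogether; deciding that a present but smudged field is truly illegible is left to the human reviewer.

```python
from enum import Enum
from typing import Dict, Optional, Tuple


class Route(Enum):
    AI_ASSISTED = "ai_assisted"    # clear enough for AI-assisted processing
    HUMAN_REVIEW = "human_review"  # a person needs to step in
    FOLLOW_UP = "follow_up"        # incomplete; follow up with the giver


# Hypothetical values: required pledge-card fields and a confidence cutoff
# on Textract's 0-100 scale.
REQUIRED_FIELDS = ("name", "email", "pledge_amount")
AUTO_THRESHOLD = 90.0


def route_card(fields: Dict[str, Tuple[Optional[str], float]]) -> Route:
    """Choose a triage path from {field_name: (value, confidence)} results."""
    extracted = [fields.get(name, (None, 0.0)) for name in REQUIRED_FIELDS]
    # A required field with no usable value means the card itself is incomplete.
    if any(not value or not value.strip() for value, _ in extracted):
        return Route.FOLLOW_UP
    # Everything is present; any low-confidence field goes to a person.
    if any(confidence < AUTO_THRESHOLD for _, confidence in extracted):
        return Route.HUMAN_REVIEW
    return Route.AI_ASSISTED


if __name__ == "__main__":
    card = {
        "name": ("Jane Doe", 98.1),
        "email": ("jane@example.com", 72.4),
        "pledge_amount": ("500", 95.0),
    }
    print(route_card(card).value)  # human_review
```

Ordering the checks this way means completeness is judged before confidence, so a card missing a pledge amount is never sent to a reviewer who cannot fix it.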

A Scrappy, Mobile-First Solution

Early experiments with high-volume document scanners failed: cardstock thickness and folds made results inconsistent and unpredictable. What we really needed was a clean image, fast.

That’s when I realized the best scanner was already in everyone’s pocket.

I wrote an Apple Shortcut from scratch that turned an iPhone into a front-end capture and validation tool. The Shortcut resized and normalized images on-device, made API calls to AWS, and returned extracted data to the user in real time. The entire scan-to-review loop took seconds.
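How the Shortcut reached AWS isn't specified here, so the following is a hedged sketch of one plausible server side: an AWS Lambda function behind an API Gateway proxy integration that receives a base64-encoded image in a JSON body and passes the bytes to Textract's synchronous `analyze_document` call. The `{"image": ...}` payload shape, the choice of the `FORMS` feature, and the extra `textract` parameter (added only so the handler can be exercised with a fake client) are assumptions.

```python
import base64
import json


def _response(status: int, payload: dict) -> dict:
    """Build an API Gateway proxy-style response."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def lambda_handler(event, context, textract=None):
    """Hypothetical endpoint: one pledge-card image in, Textract blocks out."""
    if textract is None:
        import boto3  # imported lazily so tests can pass a fake client instead
        textract = boto3.client("textract")

    raw = event.get("body") or "{}"
    if event.get("isBase64Encoded"):
        raw = base64.b64decode(raw).decode("utf-8")
    payload = json.loads(raw)
    if "image" not in payload:
        return _response(400, {"error": "missing 'image' field"})

    # Assumed payload shape: {"image": "<base64-encoded JPEG or PNG>"}.
    image_bytes = base64.b64decode(payload["image"])
    result = textract.analyze_document(
        Document={"Bytes": image_bytes},  # raw bytes; boto3 encodes them for the API
        FeatureTypes=["FORMS"],           # ask Textract for key-value pairs
    )
    return _response(200, {"blocks": result["Blocks"]})
```

A synchronous call like this fits a scan-to-review loop measured in seconds for single-page cards; multi-page documents would instead go through Textract's asynchronous `start_document_analysis`, which reads its input from S3.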

Behind the scenes, Amazon Textract extracted text from each image, and a Lambda function normalized the results and returned them as structured JSON with confidence scores. On the device, the Shortcut guided users through confirming or correcting only the fields that actually needed attention, then pushed clean data directly into Rock via its API, creating or updating records as appropriate.
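The exact normalization the Lambda performed isn't documented here, so this is only a sketch of one plausible approach. It assumes Textract's FORMS output, where each `KEY_VALUE_SET` block with entity type `KEY` links to its `VALUE` block through a `VALUE` relationship and to its `WORD` blocks through `CHILD` relationships. The label aliases, the 90-point review threshold, and the function names are hypothetical, and taking the lower of the key and value confidences is a conservative choice made for illustration.

```python
from typing import Any, Dict, List

Block = Dict[str, Any]

# Hypothetical mapping from printed card labels to canonical field names.
FIELD_ALIASES = {"name": "name", "email": "email", "amount": "pledge_amount"}
REVIEW_THRESHOLD = 90.0  # hypothetical cutoff on Textract's 0-100 scale


def _child_text(block: Block, by_id: Dict[str, Block]) -> str:
    """Join the WORD children of a KEY or VALUE block into one string."""
    words = []
    for rel in block.get("Relationships", []):
        if rel["Type"] == "CHILD":
            for child_id in rel["Ids"]:
                child = by_id[child_id]
                if child["BlockType"] == "WORD":
                    words.append(child["Text"])
    return " ".join(words)


def normalize_forms(blocks: List[Block]) -> Dict[str, Dict[str, Any]]:
    """Map Textract FORMS blocks to {field: {value, confidence, needs_review}}."""
    by_id = {b["Id"]: b for b in blocks}
    fields: Dict[str, Dict[str, Any]] = {}
    for block in blocks:
        if block["BlockType"] != "KEY_VALUE_SET":
            continue
        if "KEY" not in block.get("EntityTypes", []):
            continue  # VALUE blocks are reached through their KEY
        label = _child_text(block, by_id).strip().rstrip(":").strip().lower()
        field = FIELD_ALIASES.get(label)
        if field is None:
            continue  # a label this sketch doesn't recognize

        parts, confidence = [], block["Confidence"]
        for rel in block.get("Relationships", []):
            if rel["Type"] == "VALUE":
                for value_id in rel["Ids"]:
                    value_block = by_id[value_id]
                    parts.append(_child_text(value_block, by_id))
                    # Conservative: trust the pair only as much as its weaker half.
                    confidence = min(confidence, value_block["Confidence"])
        value = " ".join(p for p in parts if p)
        fields[field] = {
            "value": value,
            "confidence": confidence,
            "needs_review": not value or confidence < REVIEW_THRESHOLD,
        }
    return fields
```

A `needs_review` flag of this kind is what would let a front end like the Shortcut surface only the fields that need attention. Mapping the cleaned fields onto Rock is omitted because the write-up doesn't describe which Rock API endpoints were used.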

The experience was fast, intuitive, and honestly a little fun to use.

The Impact

Once I had conceptualized and tested the workflow, it performed as well as we could have hoped. The team took a both/and approach to data entry, with some staff entering cards manually and others using the scanner, and the results were clear.

Staff using the scanning workflow processed roughly twice as many cards per minute as those entering cards manually, with fewer errors and far less fatigue. Accuracy remained high, confidence in the data stayed intact, and the system scaled smoothly during peak moments.

All of this was achieved with:

No custom app

No new hardware

No added headcount

Minimal training

Why It Mattered

This project mattered because it showed how good product leadership blends strategy, empathy, and pragmatism.

Rather than chasing a perfect system or over-engineering a solution, I designed something that respected human judgment, embraced real-world messiness, and still delivered meaningful efficiency gains under pressure.

It proved that with the right framing, a scrappy approach, and thoughtful use of AI, even the messiest problems can be made tractable, without sacrificing trust, accuracy, or people along the way.